Category: Devblog

-

Dynamic vertex formats

There are a variety of ways to send vertex data to the GPU. Since DX12 and Vulkan, we can choose to use the old-school input layout definitions in the pipeline state, or use descriptors, which have become much more flexible since the DX11-era limitations. Wicked Engine has been using descriptors with bindless manual fetching for a…

-

Vulkan Video Decoding

Recently the Vulkan API received an exciting new feature: video decoding that utilizes the built-in fixed-function video unit found in many GPUs. This allows writing super fast cross-platform video applications while freeing up the CPU from expensive decoding tasks.

-

Graphics API secrets: format casting

If you spend a long enough time in graphics development, the time will come eventually when you want to cast between different formats of a GPU resource. The problem is that information about how to do this was a bit hard to come by – until now.

-

Animation Retargeting

I recently implemented animation retargeting, something that’s not frequently discussed in detail on the internet. My goal was specifically to copy animations between similar types of skeletons: humanoid to humanoid. A more complicated solution, for example one that can retarget humanoid animation to a frog, was not my intention.

-

Derivatives in compute shader

This post shows a way to compute derivatives for texture filtering in compute shaders (for visibility buffer shading). I was missing a step-by-step explanation of how to do this, but after some trial and error, the following method turned out to work well.

-

Shader compiler tools

Wicked Engine used Visual Studio to compile all its shaders for a long time, but that changed around a year ago (in 2021) when custom shader compiling tools were implemented. This blog highlights the benefits of this and may provide some new ideas if you are developing graphics programs or tools.

-

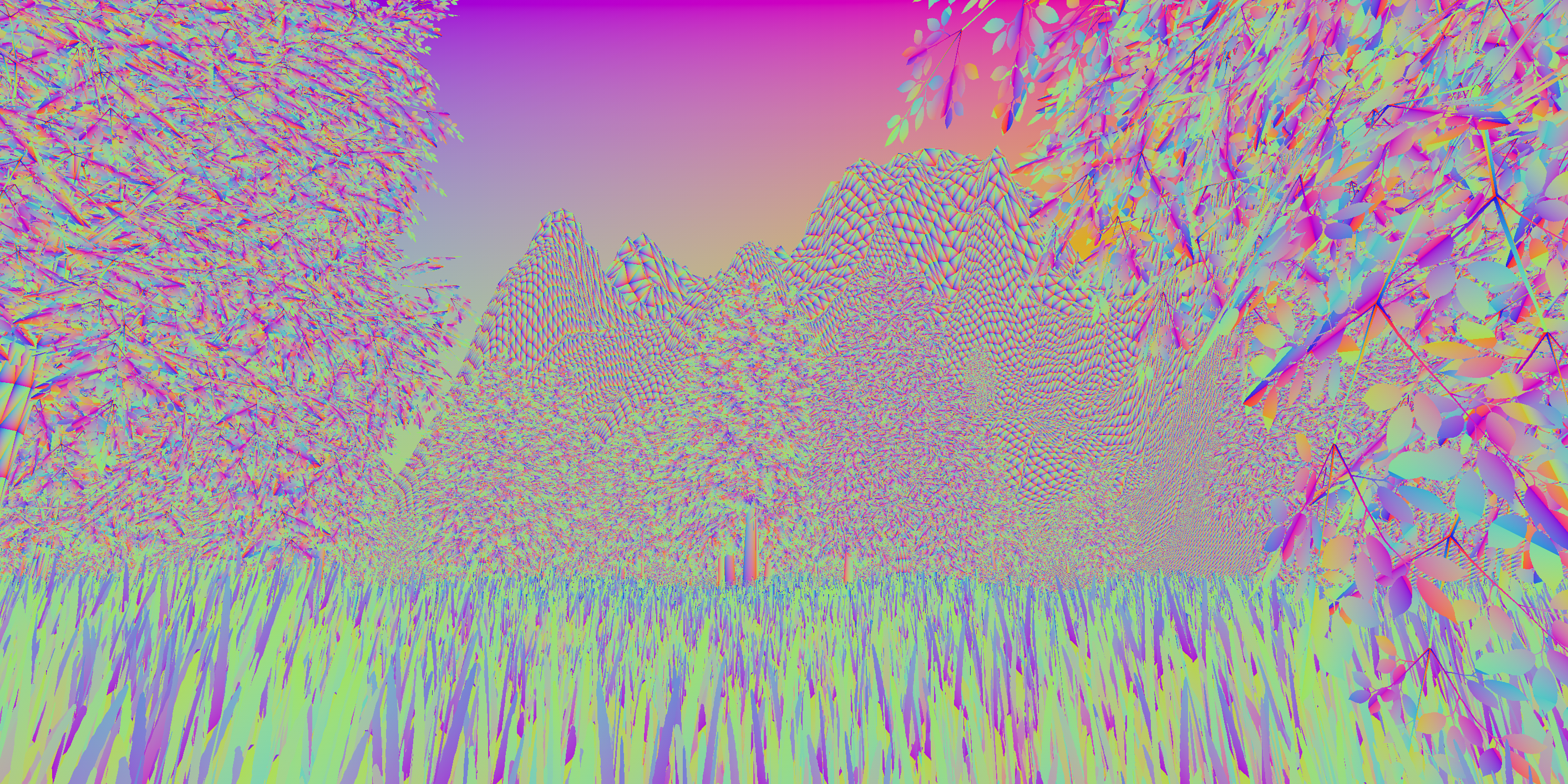

Future geometry pipeline

Lately I’ve been interested in modernizing the geometry pipeline in the rendering engine, for example reducing the vertex shaders. It’s a well-known fact that geometry shaders are not making efficient use of the GPU hardware, but similar issues could apply to vertex and tessellation shaders too, just because there is a need for pushing…

-

Graphics API abstraction

Wicked Engine can handle today’s advanced rendering effects with multiple graphics APIs (DX11, DX12 and Vulkan at the time of writing this). The key to enabling this is a good graphics abstraction, so these complicated algorithms only need to be written once.

-

Bindless Descriptors

The Vulkan and DX12 graphics devices now support bindless descriptors in Wicked Engine. Earlier and in DX11, it was only possible to access textures, buffers (resource descriptors) and samplers in the shaders by binding them to specific slots. First, the binding model limitations will be described briefly, then the bindless model will be discussed.

-

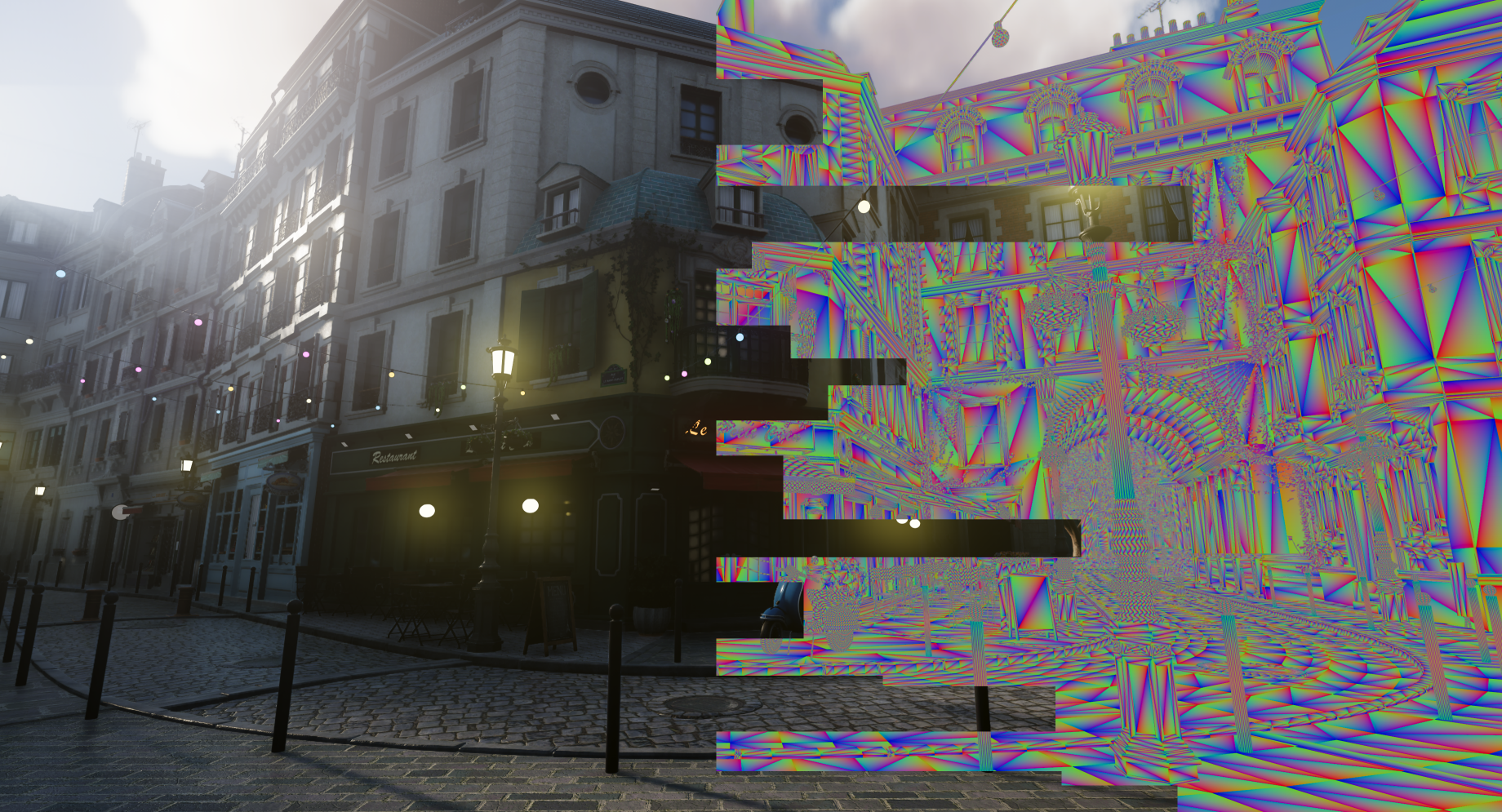

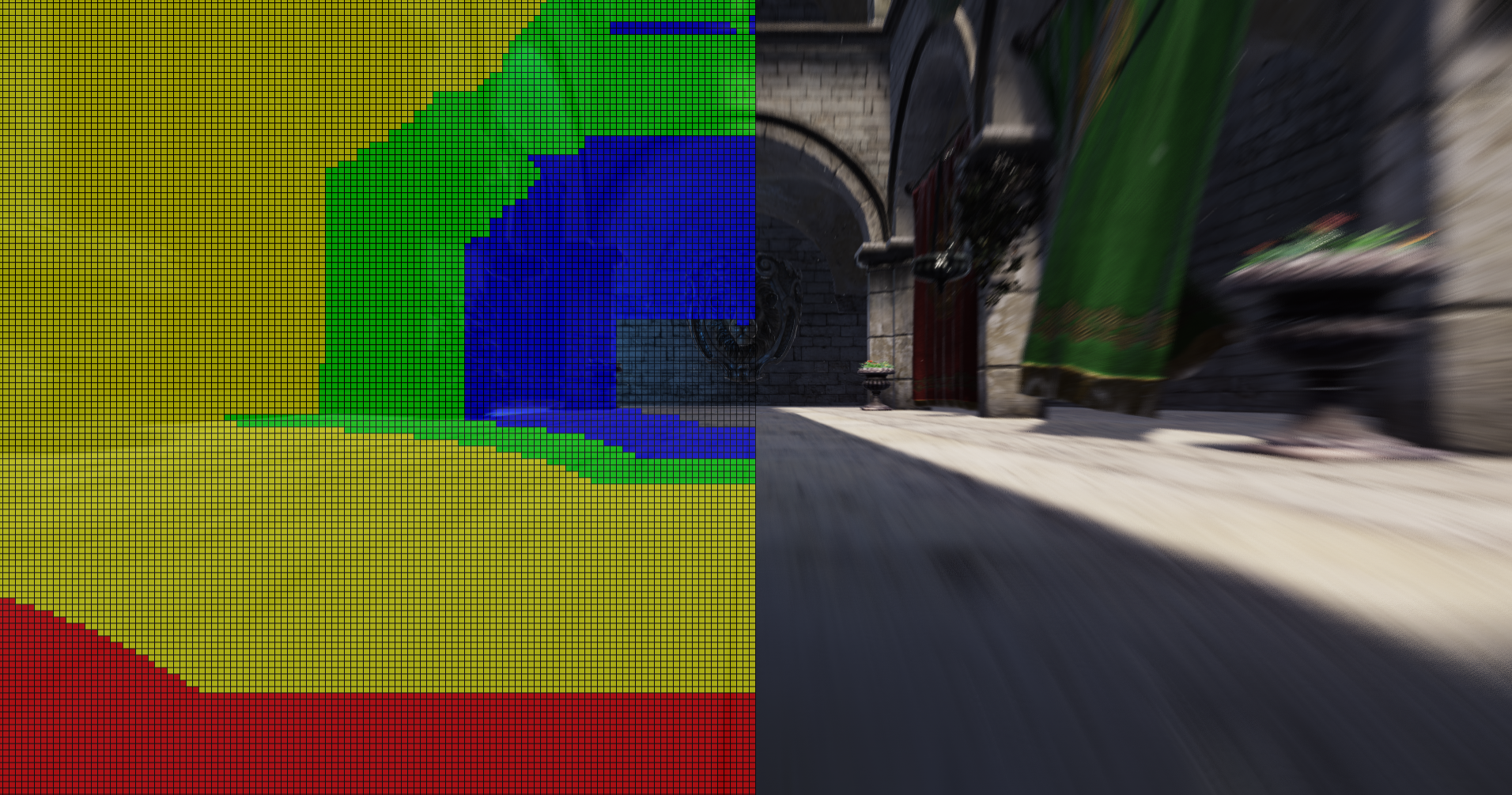

Variable Rate Shading: first impressions

Variable Rate Shading (VRS) is a recently introduced DX12 feature that can be used to control the shading rate. To be more precise, it is used to reduce the shading rate, as opposed to the Multi Sampling Anti Aliasing (MSAA) technique, which is used to increase it.

-

Capsule Collision Detection

Capsule shapes are useful tools for handling simple game physics. Here you will find out why and how to detect and handle collisions between capsule and triangle mesh, as well as with other capsules, without using a physics engine.
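As a rough illustration of why capsules are convenient, here is a minimal C++ sketch of one building block: finding the closest point on the capsule's inner segment, then using it for a capsule-versus-sphere overlap test. This is a simplified case and not the post's capsule-versus-triangle or capsule-versus-capsule code; the `Vec3` and `Capsule` types and both function names are hypothetical.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical minimal vector type, just enough for this sketch.
struct Vec3 { float x, y, z; };
inline Vec3 operator-(Vec3 a, Vec3 b) { return Vec3{a.x - b.x, a.y - b.y, a.z - b.z}; }
inline Vec3 operator+(Vec3 a, Vec3 b) { return Vec3{a.x + b.x, a.y + b.y, a.z + b.z}; }
inline Vec3 operator*(Vec3 a, float s) { return Vec3{a.x * s, a.y * s, a.z * s}; }
inline float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// A capsule is treated here as a line segment (a to b) with a radius around it.
struct Capsule { Vec3 a; Vec3 b; float radius; };

// Returns the point on segment [a, b] that is closest to p.
inline Vec3 closestPointOnSegment(Vec3 a, Vec3 b, Vec3 p)
{
    const Vec3 ab = b - a;
    const float lengthSquared = dot(ab, ab);
    if (lengthSquared <= 0.0f)
        return a; // degenerate segment: both end points coincide
    float t = dot(p - a, ab) / lengthSquared;
    t = std::min(std::max(t, 0.0f), 1.0f); // clamp to the segment
    return a + ab * t;
}

// Simplified case: the capsule overlaps a sphere when the distance from the
// sphere center to the capsule segment is at most the sum of the two radii.
inline bool capsuleIntersectsSphere(const Capsule& capsule, Vec3 center, float sphereRadius)
{
    assert(capsule.radius >= 0.0f && sphereRadius >= 0.0f);
    const Vec3 closest = closestPointOnSegment(capsule.a, capsule.b, center);
    const Vec3 d = center - closest;
    const float radiusSum = capsule.radius + sphereRadius;
    return dot(d, d) <= radiusSum * radiusSum;
}
```

Comparing squared distances avoids a square root, and the same closest-point idea is what makes capsule tests cheap compared to arbitrary shapes.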

-

Tile-based optimization for post processing

One way to optimize heavy post processing shaders is to determine which parts of the screen could use a simpler version. The simplest form of this is to use branching in the shader code to exit early or switch to a variant with reduced sample count or computations. This comes with a downside that even the…

-

Entity-component system

Here goes my idea of an entity-component system written in C++. I’ve been using this in my home-made game engine, Wicked Engine, for exactly a year now and I am still very happy with it. The focus is on simplicity and performance, not on adding many features.

-

Improved normal reconstruction from depth

In a 3D renderer, we might want to read the scene normal vectors at some point, for example in post processes. We can write them out using MRT (multiple render target) outputs from the object rendering shaders, storing the surface normals in a texture. But that normal map texture usually contains normals that have…

-

Thoughts on light culling: stream compaction vs flat bit arrays

I had my eyes set on the light culling using flat bit arrays technique for a long time now and finally decided to give it a try. Let me share my notes on why it seemed so interesting and why I replaced the stream compaction technique with this. I will describe both techniques and make…
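To make the idea a bit more concrete, here is a minimal CPU-side C++ sketch of a flat bit array, assuming the usual layout where each tile stores one bit per light instead of a compacted index list. It is not the post's shader code; `kMaxLights`, `TileLightMask` and `lightsInTile` are hypothetical names, and the light count limit is an arbitrary choice for illustration.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical limit: one bit per light, packed into 32-bit words.
constexpr std::uint32_t kMaxLights = 256;
constexpr std::uint32_t kWordCount = kMaxLights / 32;

// Per-tile mask: bit i is set when light i affects the tile.
struct TileLightMask
{
    std::uint32_t words[kWordCount] = {};

    void add(std::uint32_t lightIndex)
    {
        assert(lightIndex < kMaxLights);
        words[lightIndex / 32] |= 1u << (lightIndex % 32);
    }
};

// Walks the set bits of the mask and returns the light indices in ascending order.
inline std::vector<std::uint32_t> lightsInTile(const TileLightMask& tile)
{
    std::vector<std::uint32_t> result;
    for (std::uint32_t w = 0; w < kWordCount; ++w)
    {
        std::uint32_t bits = tile.words[w];
        while (bits != 0)
        {
            // Find the index of the lowest set bit (a shader would use an intrinsic).
            std::uint32_t bit = 0;
            while (((bits >> bit) & 1u) == 0)
                ++bit;
            result.push_back(w * 32 + bit);
            bits &= bits - 1; // clear the lowest set bit
        }
    }
    return result;
}
```

Because every tile has a fixed-size mask, culling only needs bitwise OR operations to record a light, and the lights come out sorted by index for free.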

-

Simple job system using standard C++

After experimenting with the entity-component system this fall, I wanted to see how difficult it would be to put my other unused CPU cores to good use. I never really got into CPU multithreading seriously, so this is something new for me. The idea behind the entity-component system is both to make more efficient use…

-

GPU Fluid Simulation

Let’s take a look at how to efficiently implement a particle-based fluid simulation for real time rendering. We will be running a Smoothed Particle Hydrodynamics (SPH) simulation on the GPU. This post is intended for experienced developers and provides the general steps of implementation. It is not a step-by-step tutorial, but rather introducing…

-

Thoughts on Skinning and LDS

I’m letting out some thoughts on using LDS memory as a means to optimize a skinning compute shader. Consider the following workload: each thread is responsible for animating a single vertex, so it loads the vertex position, normal, bone indices and bone weights from a vertex buffer. After this, it starts doing the skinning: for…

-

Easy Transparent Shadow Maps

Supporting transparencies with traditional shadow mapping is straightforward and allows for nice effects, but as with anything related to rendering transparents with rasterization, there are corner cases. A little sneak peek of what you can achieve with this:

-

Optimizing tile-based light culling

Tile-based lighting techniques like Forward+ and Tiled Deferred rendering are widely used these days. With the help of such techniques we can efficiently query every light affecting any surface. But a trivial implementation can be improved in many ways. The biggest goal is to refine the culling results as much as possible to help reduce the…

-

Next power of two in HLSL

There are many occasions when a programmer would want to calculate the next power of two for a given number. For me it was a bitonic sorting algorithm operating in a compute shader and I had this piece of code be responsible for calculating the next power of two of a number: uint myNumberPowerOfTwo =…
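The excerpt's own code is cut off, so the following is a separate sketch of one widely used approach, the bit-smearing trick, written in C++ rather than HLSL. It is not necessarily the method the post ends up with; `nextPowerOfTwo` and `paddedSortCount` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>

// Rounds v up to the nearest power of two; a value that already is a power
// of two is returned unchanged.
// Caveat: returns 0 for v == 0 and for v > 2^31 (the 32-bit result wraps).
inline std::uint32_t nextPowerOfTwo(std::uint32_t v)
{
    v--;           // so that exact powers of two map to themselves
    v |= v >> 1;   // smear the highest set bit into every lower bit...
    v |= v >> 2;
    v |= v >> 4;
    v |= v >> 8;
    v |= v >> 16;
    v++;           // ...then add one to reach the next power of two
    return v;
}

// Hypothetical usage: pad an element count for a sort that needs a
// power-of-two sized input, such as a bitonic sort.
inline std::uint32_t paddedSortCount(std::uint32_t itemCount)
{
    assert(itemCount > 0);
    return nextPowerOfTwo(itemCount);
}
```

The function uses only shifts, ORs and two additions, with no loops or branches, which is why this form is popular in shader code as well.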

-

GPU-based particle simulation

I finally took the leap and threw out my old CPU-based particle simulation code and ventured to GPU realms with it. The old system could spawn particles on the surface on a mesh with a starting velocity of each particle modulated by the surface normal. It kept a copy of each particle on CPU, updated…

-

Which blend state for me?

If you are familiar with creating graphics applications, you are probably somewhat familiar with different blending states. If you are like me, then you were not overly confident in using them, and got some basic ones copy-pasted from the web. Maybe got away with simple alpha blending and additive states, and heard of premultiplied alpha…

-

Forward+ decal rendering

Drawing decals in deferred renderers is quite simple, straightforward and efficient: just render boxes like you render the lights, read the gbuffer in the pixel shader, project onto the surface, then sample and blend the decal texture. The light evaluation then already computes lighting for the decaled surfaces. In traditional forward rendering pipelines, this…

-

Skinning in a Compute Shader

Recently I have moved my mesh skinning implementation from a streamout geometry shader to compute shader. One reason for this was the ugly API for the streamout which I wanted to leave behind, but the more important reason was that this could come with several benefits.

-

Area Lights

I am trying to get back into blogging. I thought writing about implementing area light rendering might help me with that.

-

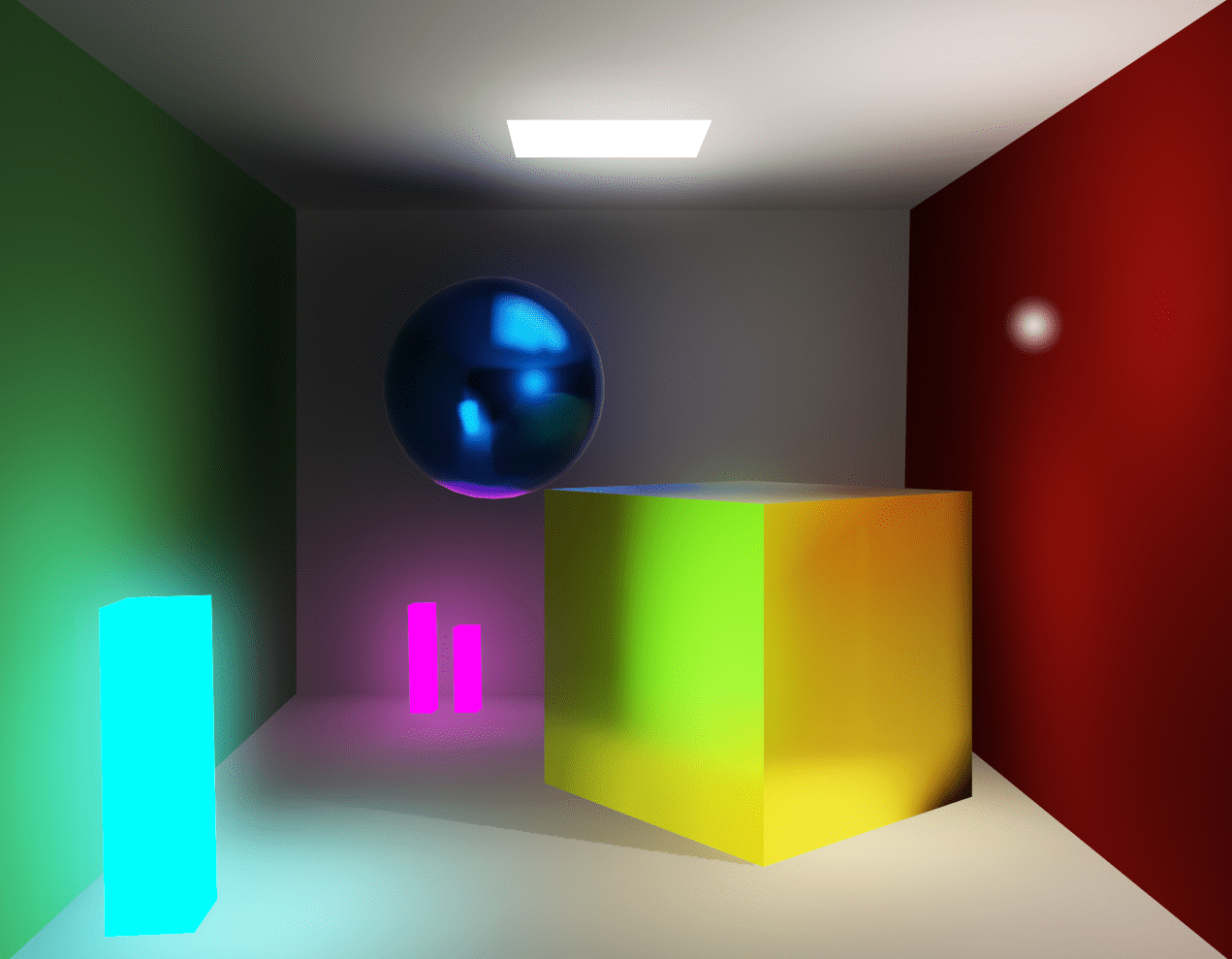

Voxel-based Global Illumination

There are several use cases of a voxel data structure. One interesting application is using it to calculate global illumination. There are a couple of techniques for that, too. I have chosen the voxel cone tracing approach, because I found it the most flexible one for dynamic scenes, but CryEngine for example, uses Light propagation…

-

Should we get rid of Vertex Buffers?

TLDR: If your only platform to support is a recent AMD GPU, or console, then yes. 🙂 I am working on a “game engine” nowadays but mainly focusing on the rendering aspect. I wanted to get rid of some APIs lately in my graphics wrapper to make it easier to use, because I just hate…

-

How to Resolve an MSAA DepthBuffer

If you want to implement MSAA (multisampled antialiasing) rendering, you need to render into multisampled render targets. When you want to read an antialiased render target as a shader resource, first you need to resolve it. Resolving means copying it to a non-multisampled texture and averaging the subsamples (in D3D11 it is performed by…

-

Abuse the immediate constant buffer!

Very often, I need to draw simple geometries, like cubes, and I want to do the minimal amount of graphics state setup. With this technique, you don’t have to set up a vertex buffer or input layout, which means you don’t have to write the boilerplate resource creation code for them, and don’t have to call the…

-

Smooth Lens Flare in the Geometry Shader

This is a historical feature from the Wicked Engine, meaning it was implemented a few years ago, but at the time it was a big step for me. I wanted to implement simple textured lens flares, but at the time all I could find was using occlusion queries to determine if a lens flare…

-

Welcome brave developer!

This is a blog containing development insight into my game engine, Wicked Engine. Feel free to rip off any code, example or technique from here, as you could also do it from the open source engine itself: https://github.com/turanszkij/WickedEngine I want to post info from historical features as well as new ones. I try to select the ones…
